Unit Testing Azure Functions in Isolated Environment

Let’s see a case study for how JustMock lets you automate testing for a typical microservice architecture (including mocking Entity Framework).

Even today, the case studies used in examples of automated testing usually ignore both the cloud environment and modern microservice architectures. So this post looks at how JustMock lets you automate testing for a typical microservice architecture, including mocking Entity Framework.

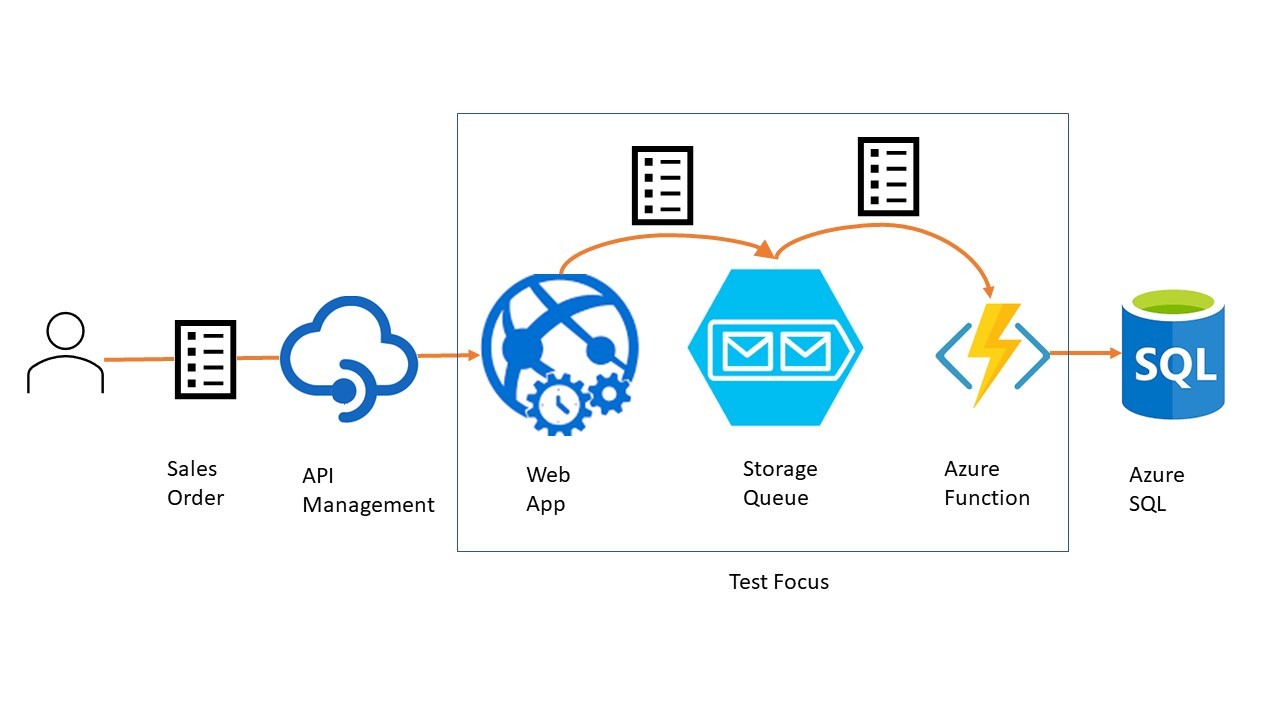

For my case study, I’m going to implement the Provider/Consumer pattern—it’s a popular pattern that provides both high scalability and reliability. This implementation of the pattern uses a Web API frontend (the Provider) to accept requests and an Azure Function backend (the Consumer) to process those requests. The Provider writes requests to an Azure Storage queue and the Consumer reads and processes those requests as they appear in the queue.
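To make the pattern concrete before any Azure pieces are involved, here's a minimal sketch in which a ConcurrentQueue<string> stands in for the Azure Storage queue. Provide and Consume are hypothetical local functions, not part of the case study's code, and a Dictionary<string, int> plays the part of a SalesOrder so the sketch stays self-contained:

```csharp
using System;
using System.Collections.Concurrent;
using System.Collections.Generic;
using System.Text.Json;

// Hypothetical in-memory stand-in for the Azure Storage queue (no Azure SDK used).
var queue = new ConcurrentQueue<string>();
var processedIds = new List<int>();

// Provider (hypothetical): serialize the request, enqueue it, return at once.
int Provide(int salesOrderId, int customerId)
{
    var order = new Dictionary<string, int>
    {
        ["SalesOrderId"] = salesOrderId,
        ["CustomerId"] = customerId
    };
    queue.Enqueue(JsonSerializer.Serialize(order));
    return 202; // mimics HTTP 202 Accepted
}

// Consumer (hypothetical): drain whatever is waiting and do the slower work.
void Consume()
{
    while (queue.TryDequeue(out string? json))
    {
        var order = JsonSerializer.Deserialize<Dictionary<string, int>>(json)!;
        processedIds.Add(order["SalesOrderId"]);
    }
}

int status = Provide(1, 112);
Provide(2, 113);
int waitingBeforeConsume = queue.Count;
Consume();

Console.WriteLine($"Provider returned {status}; {waitingBeforeConsume} message(s) were waiting");
Console.WriteLine($"Consumer processed {processedIds.Count} order(s); {queue.Count} left");
```

Because the Provider only serializes and enqueues, it returns immediately; the slower work waits until Consume drains the queue.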

Case Study

In my case study, the web service accepts SalesOrder objects from clients and writes each one to an Azure Storage queue; the client just gets back an HTTP 202 (Accepted) status code. Writing to a queue takes very little time, so this Web API application can handle a very large number of requests.

In .NET Core (or, really, any version of ASP.NET Web API), that part of my Azure microservice would look something like this:

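```csharp
using System;
using System.Text.Json;
using Azure.Storage;
using Azure.Storage.Queues;
using Microsoft.AspNetCore.Mvc;

[HttpPost]
public IActionResult Post(SalesOrder so)
{
    //…any validation/processing code…

    // Serialize the order and hand it off to the queue
    string jsonSO = JsonSerializer.Serialize<SalesOrder>(so);
    QueueClient qc = new QueueClient(
        new Uri("…URI for queue…"),
        new StorageSharedKeyCredential("…accountName", "…ugly encrypted key…"));
    qc.SendMessage(jsonSO);

    // Tell the client the request was accepted (HTTP 202), not yet processed
    return Accepted(so);
}
```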

The Azure Function that acts as the Consumer in this implementation of the pattern is wired up to execute any time a message appears in the queue and is automatically passed the body of the message. The function will take more time to process the SalesOrder it reads from the queue than the Web Service took to write the SalesOrder to the queue—among other tasks, the Consumer is going to be handling all the database updates using Entity Framework.

In peak times, the Consumer will fall behind and the number of SalesOrders sitting in the queue, waiting for processing, will increase. Over time, though, when demand on this microservice drops off, the Consumer will catch up, eventually clearing the queue (alternatively, I could start another copy of the Consumer to clear the queue faster, further improving scalability). If the Consumer should fail, the messages will sit in the queue until the Consumer is restarted, providing a high degree of reliability.
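That backlog behavior can be worked through with a little arithmetic. The sketch below assumes a hypothetical load of 10 messages per tick for three ticks followed by none, and a Consumer that clears 6 messages per tick; doubling that capacity models a second copy of the Consumer. The numbers are illustrative, not measurements:

```csharp
using System;
using System.Collections.Generic;

// Hypothetical load: a three-tick peak of 10 messages per tick, then nothing.
int[] arrivals = { 10, 10, 10, 0, 0, 0 };

// Queue depth at the end of each tick. Simplification: messages that arrive
// in a tick can be processed in that same tick, up to processedPerTick.
List<int> SimulateBacklog(int[] arrivalsPerTick, int processedPerTick)
{
    var depths = new List<int>();
    int backlog = 0;
    foreach (int arriving in arrivalsPerTick)
    {
        backlog = Math.Max(0, backlog + arriving - processedPerTick);
        depths.Add(backlog);
    }
    return depths;
}

List<int> oneConsumer = SimulateBacklog(arrivals, 6);   // one copy of the Consumer
List<int> twoConsumers = SimulateBacklog(arrivals, 12); // a second copy doubles capacity

Console.WriteLine("One consumer:  " + string.Join(", ", oneConsumer));
Console.WriteLine("Two consumers: " + string.Join(", ", twoConsumers));
```

With one Consumer, the depth climbs to 12 during the peak and reaches 0 by the second tick without new arrivals; with twice the capacity, nothing accumulates.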

When a message appears in the queue, Azure passes the body of the message and a reference to a logging tool to the function. An Azure Function that processes that message and, eventually, adds it as a SalesOrder to the database, would look something like this:

```csharp
using System;
using System.Text.Json;
using Microsoft.Azure.WebJobs;
using Microsoft.Extensions.Logging;

[FunctionName("SalesOrderProcesser")]
public static void Run(
    [QueueTrigger("salesorderprocessing", Connection = "salesorder")] string myQueueItem,
    ILogger log)
{
    try
    {
        // The queue message body is the JSON written by the Provider
        SalesOrder so = JsonSerializer.Deserialize<SalesOrder>(myQueueItem);
        log.LogInformation($"C# Queue trigger function processed: {so.SalesOrderId}");

        //…processing code…

        // Add the order to the database through Entity Framework
        SalesDb db = new SalesDb();
        db.SalesOrders.Add(so);
        db.SaveChanges();
    }
    catch (Exception e)
    {
        log.LogError($"Error in processing: {e.Message}");
    }
}
```

My first step in creating automated tests for these components in Visual Studio is to add a test project to the solution that holds them, using the project template that’s installed with JustMock. Once that’s in place, I can start writing my tests.

Testing the Provider

For the Provider, the critical test is to ensure that the right thing gets written to the queue—if that doesn’t happen or goes wrong, then everything downstream of the Provider is going to fail.

To start the test, I need a dummy SalesOrder object to pass to my Web Service's Post method. The code to create that is pretty simple:

```csharp
[TestMethod]
public void WriteSalesOrderTest()
{
    SalesOrder so = new SalesOrder
    {
        SalesOrderId = 0,
        CustomerId = 112,
        //…more properties…
    };
```

Next, I need to mock the QueueClient’s SendMessage method so I can capture what gets written to this queue. First, I instantiate a QueueClient object to use when configuring my mock SendMessage method. I’m not going to be using this QueueClient for anything other than setting up my mock methods, so I pass it dummy values, like this:

```csharp
QueueClient qc = new QueueClient(new Uri("http://phv.com"),
    new StorageSharedKeyCredential("xxx", "bcd="));
```

With that in place, I use JustMock’s Mock class to provide a mock version of the SendMessage method that, instead of writing to the queue, updates a variable in my test. I can then check what ends up in that variable in my Assert statements at the end of the test to see if the Provider did the right thing.

After setting up my variable, there are three steps to mocking SendMessage (though, thanks to JustMock’s fluent interface, it all goes into one statement).

First step: Using the Arrange method on JustMock's Mock class, I call the SendMessage method I want to mock on the QueueClient object I'm using as my base. SendMessage expects a string parameter, so I use one of JustMock's matchers (Arg.IsAny<string>() in this case) to match any string passed to it.

Second step: This isn't the QueueClient object that's used in my Provider's Post method (that method creates its own QueueClient object). However, if I use JustMock's IgnoreInstance method, any mocking I do to the object I create here will also be applied to any instance of QueueClient created within the scope of my test.

Third step: I use JustMock’s DoInstead method to provide a replacement for the real SendMessage method. My replacement is a lambda expression that, like SendMessage, accepts a string parameter. Rather than write that message to a queue, however, my lambda expression uses that parameter to update the variable in my test code.

Here’s the code that defines the variable and configures the mock method that updates that variable:

```csharp
string writtenSO = string.Empty;
Mock.Arrange(() => qc.SendMessage(Arg.IsAny<string>()))
    .IgnoreInstance()
    .DoInstead((string jSO) => writtenSO = jSO);
```
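For comparison, the capture-instead-of-send idea can be sketched without a mocking tool by passing the queue write into the Provider as a delegate. This is a different technique from JustMock's IgnoreInstance/DoInstead (it requires changing the Provider's signature), and Post here is a hypothetical local function rather than the real controller method:

```csharp
using System;
using System.Text.Json;

// Hypothetical Provider logic with the queue write passed in as a delegate.
// Production code could pass a lambda that calls QueueClient.SendMessage;
// a test passes a lambda that just records the message.
int Post(object salesOrder, Action<string> sendMessage)
{
    string json = JsonSerializer.Serialize(salesOrder);
    sendMessage(json);
    return 202; // HTTP 202 Accepted
}

// Test side: capture what would have been written to the queue.
string writtenSO = string.Empty;
int status = Post(new { SalesOrderId = 0, CustomerId = 112 }, jSO => writtenSO = jSO);

Console.WriteLine($"Status {status}; captured {writtenSO}");
```

The trade-off is what makes JustMock attractive in this case study: the delegate version only works if the production code is written to accept it, while IgnoreInstance lets the Provider keep creating its own QueueClient.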

Now that I've taken control of the queue, I can run my test. I instantiate the Web API controller that holds my method and call the method, passing my dummy SalesOrder object:

```csharp
SalesOrderProvider sop = new SalesOrderProvider();
IActionResult res = sop.Post(so);
```

Because of my JustMock code, the JSON version of the SalesOrder object that would normally be written to the queue now ends up in my writtenSO variable, where I can check it. My first Assert, then, is to see whether that variable deserializes into a SalesOrder object:

```csharp
SalesOrder writtenSalesOrder = JsonSerializer.Deserialize<SalesOrder>(writtenSO);
Assert.IsNotNull(writtenSalesOrder);
```

Putting it all together, the test looks something like this:

```csharp
[TestMethod]
public void WriteSalesOrderTest()
{
    // Arrange: a dummy order to post
    SalesOrder so = new SalesOrder
    {
        SalesOrderId = 0,
        CustomerId = 112,
        OrderDate = DateTime.Now,
        SalesOrderNumber = "A123",
        ShipDate = DateTime.Now.AddDays(4),
        TotalValue = 293.76m
    };

    // Arrange: redirect SendMessage on every QueueClient into writtenSO
    QueueClient qc = new QueueClient(new Uri("http://phv.com"),
        new StorageSharedKeyCredential("xxx", "bcd="));
    string writtenSO = string.Empty;
    Mock.Arrange(() => qc.SendMessage(Arg.IsAny<string>()))
        .IgnoreInstance()
        .DoInstead((string jSO) => writtenSO = jSO);

    // Act
    SalesOrderProvider sop = new SalesOrderProvider();
    IActionResult res = sop.Post(so);

    // Assert: the captured message is a SalesOrder
    SalesOrder writtenSalesOrder = JsonSerializer.Deserialize<SalesOrder>(writtenSO);
    Assert.IsNotNull(writtenSalesOrder);
}
```

My tests wouldn't stop here in real life, of course. For example, I'd probably check that this SalesOrder has the same property values as the SalesOrder object I passed into the Post method, and I'd create tests with various “invalid” SalesOrders to ensure the Provider does the right thing with them. I'd also run a test without mocking SendMessage to make sure I can successfully write to the queue.
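As a sketch of the first of those follow-up checks, the code below compares individual property values in the captured JSON rather than just testing for null. The captured message here is hypothetical, built from an anonymous object, and it's read with System.Text.Json's JsonDocument. The sketch also shows that an empty writtenSO (nothing captured) makes deserialization throw a JsonException rather than return null:

```csharp
using System;
using System.Text.Json;

// Hypothetical captured message, as the mocked SendMessage would record it.
string writtenSO = JsonSerializer.Serialize(new
{
    SalesOrderId = 0,
    CustomerId = 112,
    SalesOrderNumber = "A123",
    TotalValue = 293.76m
});

// Compare individual properties rather than just checking for non-null.
using JsonDocument doc = JsonDocument.Parse(writtenSO);
JsonElement root = doc.RootElement;
bool sameCustomer = root.GetProperty("CustomerId").GetInt32() == 112;
bool sameNumber = root.GetProperty("SalesOrderNumber").GetString() == "A123";
bool sameTotal = root.GetProperty("TotalValue").GetDecimal() == 293.76m;
Console.WriteLine($"Customer: {sameCustomer}, number: {sameNumber}, total: {sameTotal}");

// If nothing was captured, the variable is still empty and deserializing it
// throws instead of returning null.
bool emptyThrows = false;
try
{
    JsonSerializer.Deserialize<JsonElement>(string.Empty);
}
catch (JsonException)
{
    emptyThrows = true;
}
Console.WriteLine($"Empty message throws JsonException: {emptyThrows}");
```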

But, instead, let’s look at how I can automate testing for the Consumer portion of this pattern.

Testing the Consumer

Azure Functions are set up as static methods on a class. As a result, to call my Azure Function from a test, all I have to do is call the function from the class, passing the two parameters the method expects: the message portion of the queue object that triggered the function (in this case, a JSON version of a SalesOrder object) and some object that implements the ILogger interface.

I’ll create the SalesOrder to pass to the function just as I did with the Provider, but I will add one more line to convert my SalesOrder to JSON:

```csharp
string jsonSO = JsonSerializer.Serialize(so);
```

With my mock message created, I can create my mock logger. With JustMock, depending on what I want to test on the logger, I have several ways that I could mock it. I could, for example, track the number of times that any method on the logger is called or capture the messages sent to those methods.

I’ll start with two simpler tests, though: If the code runs as expected, the ILogger’s LogInformation method should be called exactly once and the LogError method should never be called (again, if everything goes right).

To check those conditions, I first use the Mock class's Create method to generate a mock ILogger object to use as the base for mocking the LogInformation and LogError methods. I then use the Mock class's Arrange method to specify that I expect LogInformation to be called once and LogError never to be called. JustMock verifies occurrence expectations like these when the test calls Mock.Assert on the mock, so the finished test needs that call at the end.

The code to mock my logger ends up looking like this:

```csharp
ILogger mockLog = Mock.Create<ILogger>();
Mock.Arrange(() => mockLog.LogInformation(Arg.AnyString)).OccursOnce();
Mock.Arrange(() => mockLog.LogError(Arg.AnyString)).OccursNever();
```
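To see what OccursOnce and OccursNever are checking, here's a sketch that does the same counting by hand. The two Action<string> delegates are hypothetical stand-ins for ILogger's LogInformation and LogError (Microsoft.Extensions.Logging isn't involved), and Run is a hypothetical local function that mirrors the try/catch shape of the Azure Function:

```csharp
using System;
using System.Text.Json;

// Hypothetical counting fakes standing in for ILogger's LogInformation and LogError.
int infoCalls = 0;
int errorCalls = 0;
Action<string> logInformation = _ => infoCalls++;
Action<string> logError = _ => errorCalls++;

// Hypothetical consumer logic with the same try/catch shape as the Azure Function.
void Run(string myQueueItem)
{
    try
    {
        using JsonDocument so = JsonDocument.Parse(myQueueItem);
        int id = so.RootElement.GetProperty("SalesOrderId").GetInt32();
        logInformation($"C# Queue trigger function processed: {id}");
    }
    catch (Exception e)
    {
        logError($"Error in processing: {e.Message}");
    }
}

// A well-formed message: expect exactly one info call and no error calls.
Run("{\"SalesOrderId\":0,\"CustomerId\":112}");
bool onceAndNever = infoCalls == 1 && errorCalls == 0;
Console.WriteLine($"Well-formed message: info={infoCalls}, error={errorCalls}");

// A malformed message: parsing throws, so the error path runs instead.
Run("not json");
Console.WriteLine($"Malformed message: info={infoCalls}, error={errorCalls}");
```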

Mocking Database Access

While I now have the parameters to call my Azure function, I also want to take control of the Entity Framework code inside the function so that I can check that the right data is going to the database (plus, I don’t want my test runs to be adding these dummy sales orders to my database). That requires just three steps.

My first step is to create a dummy collection to be used by my DbContext object in place of the real database:

```csharp
IList<SalesOrder> mockSOs = new List<SalesOrder>();
```

My second step is to create an instance of the DbContext class (SalesDb) that the function uses. Once I've created it, I have the Mock class return my dummy collection whenever the function accesses the SalesOrders collection. I use IgnoreInstance here again because the DbContext object I'm using to create my mocks isn't the one that my function uses.

Here’s that code:

```csharp
SalesDb db = new SalesDb();
Mock.Arrange(() => db.SalesOrders)
    .IgnoreInstance()
    .ReturnsCollection<SalesOrder>(mockSOs);
```

The third step is to address the two methods that are called inside the function: The Add method on the SalesOrders collection and the SaveChanges method on the DbContext object itself.

The SaveChanges method is easy to mock: I use the Mock class to tell SaveChanges to do nothing, adding IgnoreInstance so that the arrangement also applies to the SalesDb object the function creates. For the Add method, I'll have Mock swap in a new set of code that adds the SalesOrder object passed to the Add method to my dummy collection, rather than to the database.

Here's that code:

```csharp
Mock.Arrange(() => db.SaveChanges())
    .IgnoreInstance()
    .DoNothing();
Mock.Arrange(() => db.SalesOrders.Add(Arg.IsAny<SalesOrder>()))
    .IgnoreInstance()
    .DoInstead((SalesOrder addSO) => mockSOs.Add(addSO));
```
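The same substitution can be sketched without Entity Framework or JustMock: Add writes to an in-memory list and SaveChanges does nothing except count its calls. The add and saveChanges delegates and the Process function are hypothetical, and the list holds SalesOrderNumber strings rather than SalesOrder objects to keep the sketch self-contained:

```csharp
using System;
using System.Collections.Generic;
using System.Text.Json;

// Dummy collection standing in for the SalesOrders table (holds order numbers only).
IList<string> mockSOs = new List<string>();
int saveCalls = 0;

// Hypothetical seams for the two Entity Framework calls the function makes:
// Add goes to the in-memory list; SaveChanges only counts that it was called.
Action<string> add = order => mockSOs.Add(order);
Func<int> saveChanges = () => { saveCalls++; return 0; };

// Hypothetical consumer body: deserialize, add, save.
void Process(string myQueueItem)
{
    using JsonDocument so = JsonDocument.Parse(myQueueItem);
    add(so.RootElement.GetProperty("SalesOrderNumber").GetString()!);
    saveChanges();
}

Process("{\"SalesOrderId\":0,\"SalesOrderNumber\":\"A123\"}");
Console.WriteLine($"Orders in dummy collection: {mockSOs.Count}; SaveChanges calls: {saveCalls}");
```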

With all of that arranging done, I'm ready to call my function, passing the JSON version of my dummy SalesOrder and my mock logger. After the method finishes running, I'll check to see if anything has ended up in my dummy collection:

```csharp
SalesOrderConsumer.SalesProcesser.Run(jsonSO, mockLog);
Assert.AreEqual(1, mockSOs.Count);
```

When this test is put all together, it would look like this:

```csharp
[TestMethod]
public void ReadTest()
{
    // Arrange: a mock logger expecting one LogInformation call and no LogError calls
    ILogger mockLog = Mock.Create<ILogger>();
    Mock.Arrange(() => mockLog.LogInformation(Arg.AnyString)).OccursOnce();
    Mock.Arrange(() => mockLog.LogError(Arg.AnyString)).OccursNever();

    // Arrange: route every SalesDb's SalesOrders to an in-memory list
    IList<SalesOrder> mockSOs = new List<SalesOrder>();
    SalesDb db = new SalesDb();
    Mock.Arrange(() => db.SalesOrders)
        .IgnoreInstance()
        .ReturnsCollection<SalesOrder>(mockSOs);
    Mock.Arrange(() => db.SaveChanges())
        .IgnoreInstance()
        .DoNothing();
    Mock.Arrange(() => db.SalesOrders.Add(Arg.IsAny<SalesOrder>()))
        .IgnoreInstance()
        .DoInstead((SalesOrder addSo) => mockSOs.Add(addSo));

    // Arrange: the queue message the function will receive
    SalesOrder so = new SalesOrder
    {
        SalesOrderId = 0,
        CustomerId = 112,
        OrderDate = DateTime.Now,
        SalesOrderNumber = "A123",
        ShipDate = DateTime.Now.AddDays(4),
        TotalValue = 293.76m
    };
    string jsonSO = JsonSerializer.Serialize(so);

    // Act
    SalesProcesser.Run(jsonSO, mockLog);

    // Assert: one order reached the dummy collection, and the logger
    // expectations (OccursOnce/OccursNever) were met
    Assert.AreEqual(1, mockSOs.Count);
    Mock.Assert(mockLog);
}
```

In my real test, of course, I’d check for more than there being exactly one object in my dummy collection. (Full code for download is here.)

I can now check all of this into source control and add my tests to my nightly build run. My next step is to start thinking about how I’m going to write the integration tests that will prove all this code works together after it’s loaded into Azure (I’ll probably use Test Studio for APIs for that). But that’s another blog post.

Peter Vogel

Peter Vogel is a system architect and principal in PH&V Information Services. PH&V provides full-stack consulting from UX design through object modeling to database design. Peter also writes courses and teaches for Learning Tree International.